Võ Đức Chính

Intern

Architecture:

The Wazuh manager pushes policies to the agents and collects their raw logs. Fluentd then ships the manager's archived events to OpenSearch.


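1. Install Wazuh Manager on the server

Add the Wazuh APT repository and install wazuh-manager and filebeat.

Download Filebeat's sample configuration file.

Start Wazuh.

Create one group for Windows agents and one for Linux agents.

List the groups.

Prepare the sample configuration file that pushes the policy to Linux agents.

Assign an agent to a group.

Remove an agent from a group.

Check whether an agent has received the updated configuration.

Edit /var/ossec/etc/ossec.conf so that the manager archives every event it receives, in both plain text and JSON, and stops writing alerts.log.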

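2. Install OpenSearch on the server

Create the docker-compose.yml file. The stack has to run on the Wazuh manager host, because the fluentd container bind-mounts /var/ossec/logs/archives from that host (read-only).

Create the fluent.conf file.

Create the default.conf file.

Then start the stack: docker compose up -d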

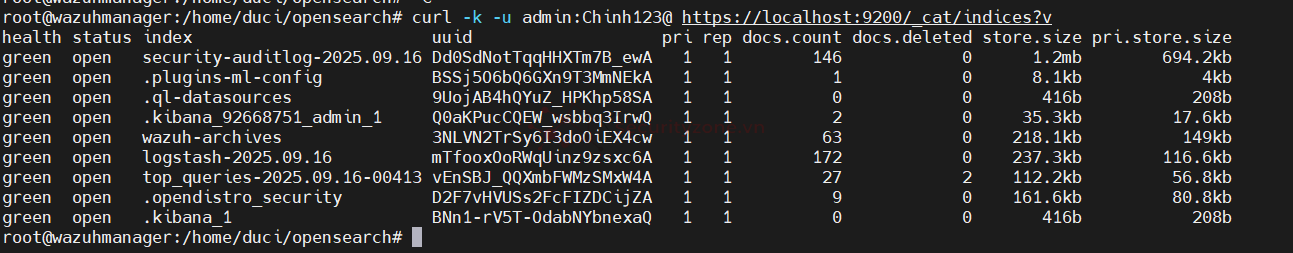

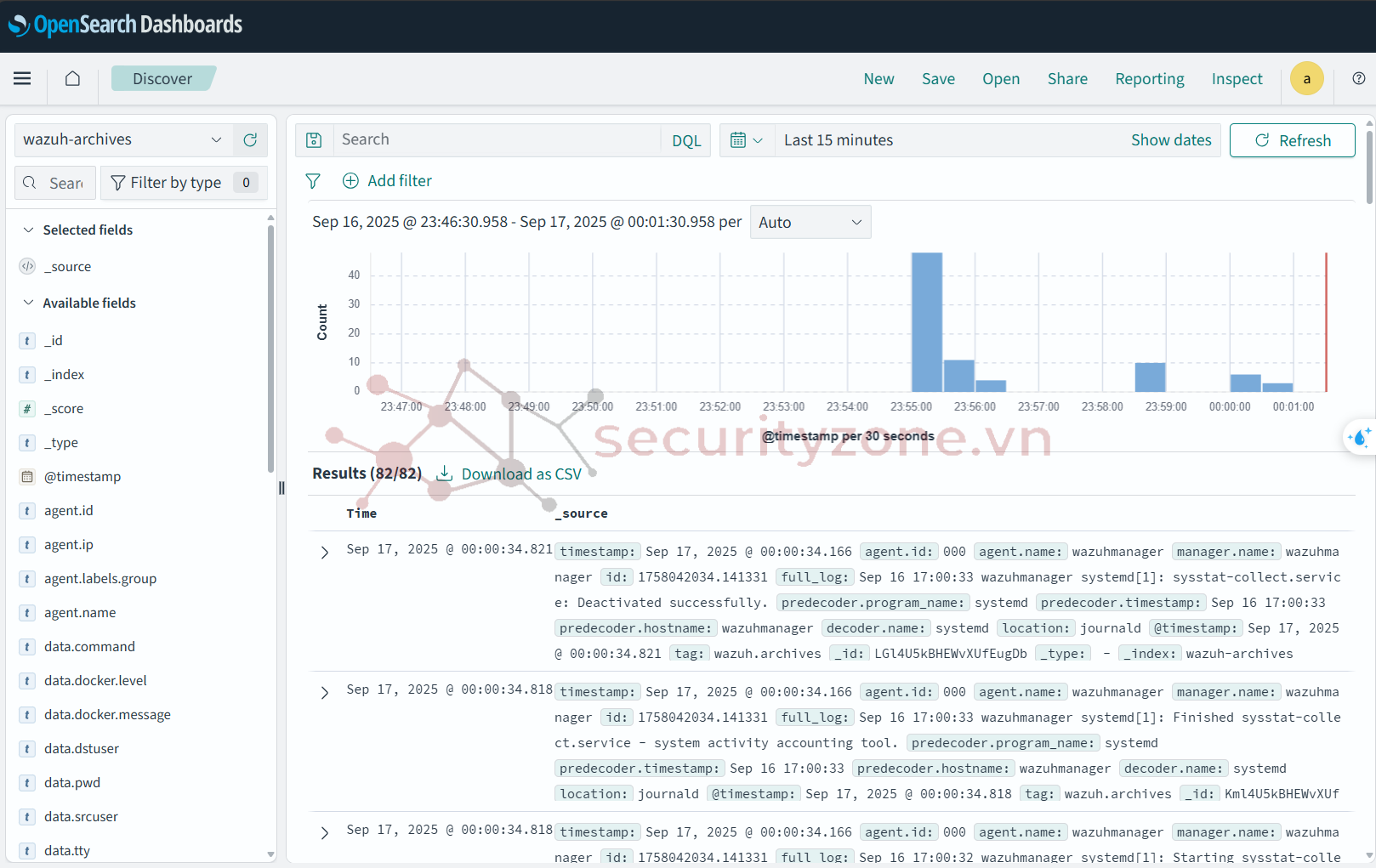

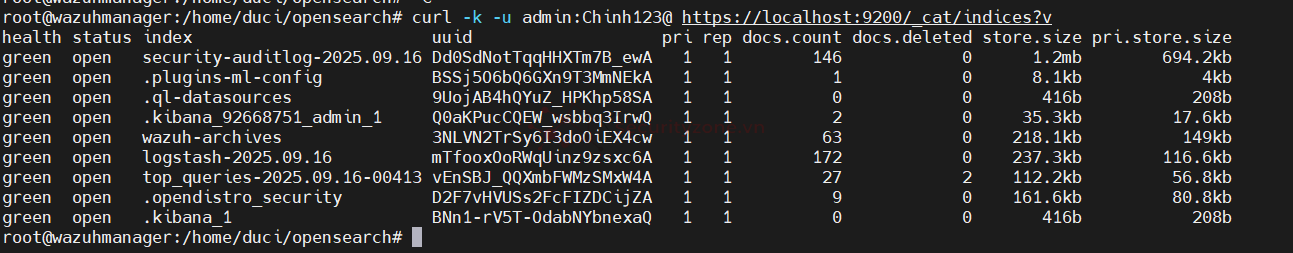

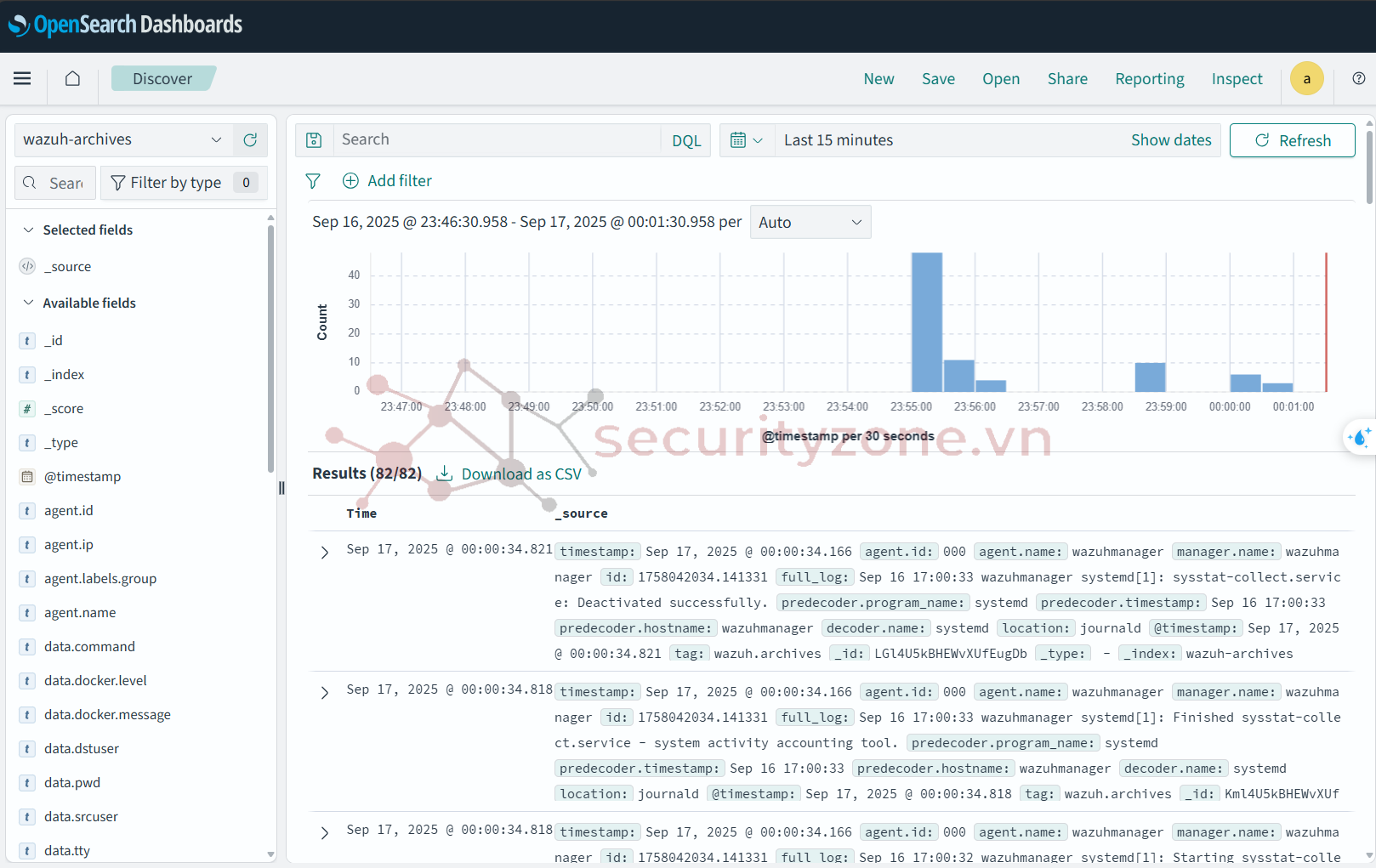

The logs are then sent to the wazuh-archives index.

1. Install Wazuh Manager on the server

```bash
# Run as root: add the Wazuh APT repository, then install the manager and Filebeat
apt-get install gnupg apt-transport-https
curl -s https://packages.wazuh.com/key/GPG-KEY-WAZUH | gpg --no-default-keyring --keyring gnupg-ring:/usr/share/keyrings/wazuh.gpg --import && chmod 644 /usr/share/keyrings/wazuh.gpg
echo "deb [signed-by=/usr/share/keyrings/wazuh.gpg] https://packages.wazuh.com/4.x/apt/ stable main" | tee -a /etc/apt/sources.list.d/wazuh.list
# Refresh the package index so APT can see the new repository
apt-get update
apt-get -y install wazuh-manager
apt-get -y install filebeat
```

```bash
# Download Wazuh's sample Filebeat configuration (4.12 template)
curl -so /etc/filebeat/filebeat.yml https://packages.wazuh.com/4.12/tpl/wazuh/filebeat/filebeat.yml
```

```bash
systemctl daemon-reload
systemctl enable wazuh-manager
systemctl start wazuh-manager
```

```bash
# Create the two agent groups (-a add, -g group name, -q skip the confirmation prompt)
/var/ossec/bin/agent_groups -a -g windows_agent -q
/var/ossec/bin/agent_groups -a -g linux_agent -q
```

```bash
/var/ossec/bin/agent_groups -l
```

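```xml
<!-- Group config for linux_agent; on the manager it goes in /var/ossec/etc/shared/linux_agent/agent.conf -->
<agent_config>
  <!-- Classic syslog file -->
  <localfile>
    <log_format>syslog</log_format>
    <location>/var/log/syslog</location>
  </localfile>

  <!-- Whole systemd journal (no filter) -->
  <localfile>
    <location>journald</location>
    <log_format>journald</log_format>
  </localfile>

  <!-- Journal entries from the SSH service -->
  <localfile>
    <location>journald</location>
    <log_format>journald</log_format>
    <filter field="_SYSTEMD_UNIT">^ssh.service$</filter>
  </localfile>

  <!-- cron entries with priority 0-6, i.e. everything except debug (7) -->
  <localfile>
    <location>journald</location>
    <log_format>journald</log_format>
    <filter field="_SYSTEMD_UNIT">^cron.service$</filter>
    <filter field="PRIORITY">[0-6]</filter>
  </localfile>

  <!-- Docker daemon entries -->
  <localfile>
    <location>journald</location>
    <log_format>journald</log_format>
    <filter field="_SYSTEMD_UNIT">^docker.service$</filter>
  </localfile>

  <!-- Authentication log -->
  <localfile>
    <location>/var/log/auth.log</location>
    <log_format>syslog</log_format>
  </localfile>

  <!-- Label added to every event from agents in this group -->
  <labels>
    <label key="group">Linux_Agent</label>
  </labels>
</agent_config>
```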
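Wazuh reads a group's centralized configuration from /var/ossec/etc/shared/<group>/agent.conf on the manager. Assume the XML above has been saved locally under a hypothetical name such as linux_agent.conf. The sketch below copies it into place and then validates it with the manager's verify-agent-conf tool, if that tool is installed. `group_conf_path`, `install_group_conf` and the `WAZUH_HOME` override are illustrative names and are not part of Wazuh.

```bash
# Sketch: install a group's agent.conf on the Wazuh manager (hypothetical helpers).
# WAZUH_HOME defaults to /var/ossec, the install path used throughout this article.
WAZUH_HOME="${WAZUH_HOME:-/var/ossec}"

# Print where the centralized config for group $1 lives.
group_conf_path() {
  printf '%s/etc/shared/%s/agent.conf\n' "$WAZUH_HOME" "$1"
}

# Copy config file $2 into group $1's shared directory, then validate it.
install_group_conf() {
  group="$1"
  src="$2"
  dest="$(group_conf_path "$group")"
  mkdir -p "$(dirname "$dest")" || return 1
  cp "$src" "$dest" || return 1
  # verify-agent-conf ships with wazuh-manager; this step is skipped if it is absent
  if [ -x "$WAZUH_HOME/bin/verify-agent-conf" ]; then
    "$WAZUH_HOME/bin/verify-agent-conf" -f "$dest"
  fi
}
```

Usage, run as root on the manager: `install_group_conf linux_agent ./linux_agent.conf`. The `agent_groups -a -g linux_agent` step above normally creates the group directory already, so the `mkdir -p` is only a safety net.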

```bash
# <GROUP_ID> is the group name, e.g. linux_agent
/var/ossec/bin/agent_groups -a -i <AGENT_ID> -g <GROUP_ID> -q
```

```bash
/var/ossec/bin/agent_groups -r -i <AGENT_ID> -g <GROUP_ID> -q
```

```bash
# -S shows whether the agent's group configuration is synchronized
/var/ossec/bin/agent_groups -S -i <AGENT_ID>
```
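When several agents need the same policy, you can run the assign and check commands above in a loop. This is a minimal sketch. `assign_agents` and the `AGENT_GROUPS` override are hypothetical names, and the agent IDs in the usage line are placeholders.

```bash
# Sketch: add several agents to one group, then print each agent's sync status.
# AGENT_GROUPS defaults to the manager's agent_groups tool used above.
AGENT_GROUPS="${AGENT_GROUPS:-/var/ossec/bin/agent_groups}"

assign_agents() {
  group="$1"
  shift
  # Same call as "assign an agent to a group", once per agent ID
  for id in "$@"; do
    "$AGENT_GROUPS" -a -i "$id" -g "$group" -q || return 1
  done
  # Same call as "check whether the agent has the updated config"
  for id in "$@"; do
    "$AGENT_GROUPS" -S -i "$id"
  done
}
```

Usage: `assign_agents linux_agent 001 002`. An agent may still report that it is not synchronized until it next checks in with the manager. If so, re-run the `-S` check a little later.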

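```xml
<!-- /var/ossec/etc/ossec.conf: set these inside the existing <global> section -->
<alerts_log>no</alerts_log>        <!-- stop writing logs/alerts/alerts.log -->
<logall>yes</logall>               <!-- archive every event to logs/archives/archives.log -->
<logall_json>yes</logall_json>     <!-- and to logs/archives/archives.json, which Fluentd tails -->
```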
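The manager reads ossec.conf only at startup, so restart it after this edit with `systemctl restart wazuh-manager`. The hypothetical helper below then checks that archives.json, the file that Fluentd later tails through the /wazuh-archives mount, is actually being written. It does this by printing the most recent event. `latest_archive_event` and the `ARCHIVES_JSON` override are illustrative names.

```bash
# Sketch: confirm the manager is writing JSON archives after the restart.
ARCHIVES_JSON="${ARCHIVES_JSON:-/var/ossec/logs/archives/archives.json}"

latest_archive_event() {
  # -s: the file exists and is non-empty; otherwise report and fail
  if [ -s "$ARCHIVES_JSON" ]; then
    tail -n 1 "$ARCHIVES_JSON"
  else
    echo "no events in $ARCHIVES_JSON yet" >&2
    return 1
  fi
}
```

Each line of archives.json is one JSON event. That is why fluent.conf can read the file with a plain `tail` source and a `json` parser.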

2. Install OpenSearch on the server

Create docker-compose.yml:

```yaml
services:
  # --- OpenSearch Node 1 ---
  opensearch-node1:
    image: opensearchproject/opensearch:3
    container_name: opensearch-node1
    environment:
      - cluster.name=opensearch-cluster
      - node.name=opensearch-node1
      - discovery.seed_hosts=opensearch-node1,opensearch-node2
      - cluster.initial_cluster_manager_nodes=opensearch-node1,opensearch-node2
      - bootstrap.memory_lock=true
      - OPENSEARCH_JAVA_OPTS=-Xms512m -Xmx512m
      - OPENSEARCH_INITIAL_ADMIN_PASSWORD=Chinh123@
    ulimits:
      memlock:
        soft: -1
        hard: -1
      nofile:
        soft: 65536
        hard: 65536
    volumes:
      - opensearch-data1:/usr/share/opensearch/data
    ports:
      - "9200:9200"
      - "9600:9600"
    networks:
      - opensearch-net

  # --- OpenSearch Node 2 ---
  opensearch-node2:
    image: opensearchproject/opensearch:3
    container_name: opensearch-node2
    environment:
      - cluster.name=opensearch-cluster
      - node.name=opensearch-node2
      - discovery.seed_hosts=opensearch-node1,opensearch-node2
      - cluster.initial_cluster_manager_nodes=opensearch-node1,opensearch-node2
      - bootstrap.memory_lock=true
      - OPENSEARCH_JAVA_OPTS=-Xms512m -Xmx512m
      - OPENSEARCH_INITIAL_ADMIN_PASSWORD=Chinh123@
    ulimits:
      memlock:
        soft: -1
        hard: -1
      nofile:
        soft: 65536
        hard: 65536
    volumes:
      - opensearch-data2:/usr/share/opensearch/data
    networks:
      - opensearch-net

  # --- OpenSearch Dashboards ---
  opensearch-dashboards:
    image: opensearchproject/opensearch-dashboards:3
    container_name: opensearch-dashboards
    ports:
      - "5601:5601"
    environment:
      OPENSEARCH_HOSTS: '["https://opensearch-node1:9200","https://opensearch-node2:9200"]'
    networks:
      - opensearch-net

  # --- Nginx ---
  nginx:
    image: nginx:1.27.4-alpine
    container_name: nginx
    ports:
      - "8080:80"
    volumes:
      - nginx-logs:/var/log/nginx
      - ./default.conf:/etc/nginx/conf.d/default.conf
    networks:
      - opensearch-net

  # --- Fluentd ---
  fluentd:
    image: fluent/fluentd:v1.17-debian
    container_name: fluentd
    user: root
    command: >
      sh -c "gem install fluent-plugin-opensearch && fluentd -c /fluentd/etc/fluent.conf"
    volumes:
      - nginx-logs:/var/log/fluentd
      - ./fluent.conf:/fluentd/etc/fluent.conf
      # Wazuh archives from the host, read-only; fluent.conf tails archives.json here
      - /var/ossec/logs/archives:/wazuh-archives:ro
    environment:
      - FLUENTD_CONF=fluent.conf
    ports:
      - "24224:24224"
      - "24225:24225"
    networks:
      - opensearch-net

volumes:
  opensearch-data1:
  opensearch-data2:
  nginx-logs:
  fluentd-ui-data:   # not used by any service above

networks:
  opensearch-net:
```
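On a Linux host, OpenSearch's Docker documentation asks for the kernel setting vm.max_map_count to be at least 262144. If it is lower, nodes configured as a cluster like the two above can fail their startup checks. The following is a small pre-flight check to run before `docker compose up -d`. `check_max_map_count` is a hypothetical helper that takes an optional value to test; without one it reads the running kernel's value.

```bash
# Sketch: pre-flight check for the OpenSearch containers.
MIN_MAP_COUNT=262144

check_max_map_count() {
  # Use the argument if given, otherwise read the current kernel value
  current="${1:-$(cat /proc/sys/vm/max_map_count)}"
  if [ "$current" -ge "$MIN_MAP_COUNT" ]; then
    echo "vm.max_map_count ok ($current)"
  else
    echo "vm.max_map_count too low ($current); raise it with: sysctl -w vm.max_map_count=$MIN_MAP_COUNT" >&2
    return 1
  fi
}
```

`sysctl -w` only changes the running kernel. To keep the value across reboots, it also has to go into a sysctl configuration file.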

```conf
# fluent.conf
# =========================
# --- Host Linux ---
# =========================
<source>
  @type forward
  @id in_forward_linux
  port 24224
  bind 0.0.0.0
  # Used instead of the client's tag, so <match linux.**> picks these events up
  tag linux
</source>

<match linux.**>
  @type opensearch
  @id out_os_linux
  @log_level info
  include_tag_key true
  host opensearch-node1
  port 9200
  scheme https
  # Lab setup: OpenSearch runs with its self-signed demo certificates
  ssl_verify false
  ssl_version TLSv1_2
  user admin
  password Chinh123@
  index_name fluentd_linux
  logstash_format false
  include_timestamp true
  time_key_format %Y-%m-%dT%H:%M:%S.%N%z
  time_key time
  <buffer>
    flush_thread_count 1
    flush_mode interval
    flush_interval 10s
    chunk_limit_size 8M
    total_limit_size 512M
    retry_max_interval 30
    retry_timeout 72h
    retry_forever false
  </buffer>
</match>

# =========================
# --- Host Windows ---
# =========================
<source>
  @type forward
  @id in_forward_win
  port 24225
  bind 0.0.0.0
  tag win
</source>

<match win.**>
  @type opensearch
  @id out_os_win
  @log_level info
  include_tag_key true
  host opensearch-node1
  port 9200
  scheme https
  ssl_verify false
  ssl_version TLSv1_2
  user admin
  password Chinh123@
  index_name fluentd_win
  logstash_format false
  include_timestamp true
  time_key_format %Y-%m-%dT%H:%M:%S.%N%z
  time_key time
  <buffer>
    flush_thread_count 1
    flush_mode interval
    flush_interval 10s
    chunk_limit_size 8M
    total_limit_size 512M
    retry_max_interval 30
    retry_timeout 72h
    retry_forever false
  </buffer>
</match>

# =========================
# --- Wazuh Archives JSON ---
# =========================
<source>
  @type tail
  @id in_tail_wazuh_json
  # The host's /var/ossec/logs/archives, mounted read-only in docker-compose.yml
  path /wazuh-archives/archives.json
  pos_file /fluentd/log/wazuh-archives-json.pos
  tag wazuh.archives
  <parse>
    @type json
  </parse>
</source>

<match wazuh.archives>
  @type opensearch
  @id out_os_wazuh_json
  @log_level info
  include_tag_key true
  host opensearch-node1
  port 9200
  scheme https
  ssl_verify false
  ssl_version TLSv1_2
  user admin
  password Chinh123@
  index_name wazuh-archives
  logstash_format false
  include_timestamp true
  time_key time
  time_key_format %Y-%m-%dT%H:%M:%S.%N%z
  request_timeout 60s
  <buffer>
    flush_interval 10s
    chunk_limit_size 2M
    retry_max_interval 30
    retry_timeout 72h
  </buffer>
</match>
```
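Each forward source sets `tag`, so Fluentd labels incoming records `linux` (port 24224) or `win` (port 24225), whatever tag the client sent. The `<match linux.**>` and `<match win.**>` blocks route on those labels. One way to test a route end to end is to push a record through fluent-cat, the command-line client bundled with Fluentd, from inside the fluentd container. `send_test_event`, the record and the `smoke.test` tag are hypothetical.

```bash
# Sketch: send one test record to a forward input of the fluentd container.
# $1 = port: 24224 (ends up in index fluentd_linux) or 24225 (fluentd_win).
send_test_event() {
  echo '{"message":"fluentd forward smoke test"}' |
    docker exec -i fluentd fluent-cat --port "$1" smoke.test
}
```

After `send_test_event 24224`, the record should show up in the fluentd_linux index once the 10s flush interval has passed.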

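```nginx
# default.conf: site config mounted into the nginx container
server {
    listen       80;
    listen  [::]:80;
    server_name  localhost;

    # "main" is the log_format defined in the image's default nginx.conf;
    # /var/log/nginx is the shared nginx-logs volume
    access_log  /var/log/nginx/host.access.log  main;

    location / {
        root   /usr/share/nginx/html;
        index  index.html index.htm;
    }

    error_page   500 502 503 504  /50x.html;
    location = /50x.html {
        root   /usr/share/nginx/html;
    }
}
```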
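To confirm that Wazuh events are reaching OpenSearch, you can query the index's `_count` endpoint from the host through the published port 9200. This sketch assumes the admin credentials from docker-compose.yml. It passes `-k` to skip certificate verification, for the same reason fluent.conf sets `ssl_verify false`. `count_docs`, `OS_URL` and `OS_AUTH` are hypothetical names.

```bash
# Sketch: count documents in an OpenSearch index from the Docker host.
OS_URL="${OS_URL:-https://localhost:9200}"
OS_AUTH="${OS_AUTH:-admin:Chinh123@}"

count_docs() {
  # -s silent, -k accept the self-signed demo certificate, -u basic auth
  curl -sk -u "$OS_AUTH" "$OS_URL/$1/_count"
}
```

Usage: `count_docs wazuh-archives` returns a small JSON document with a `count` field. Run it twice a few seconds apart; the count should grow while agents are sending events.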

